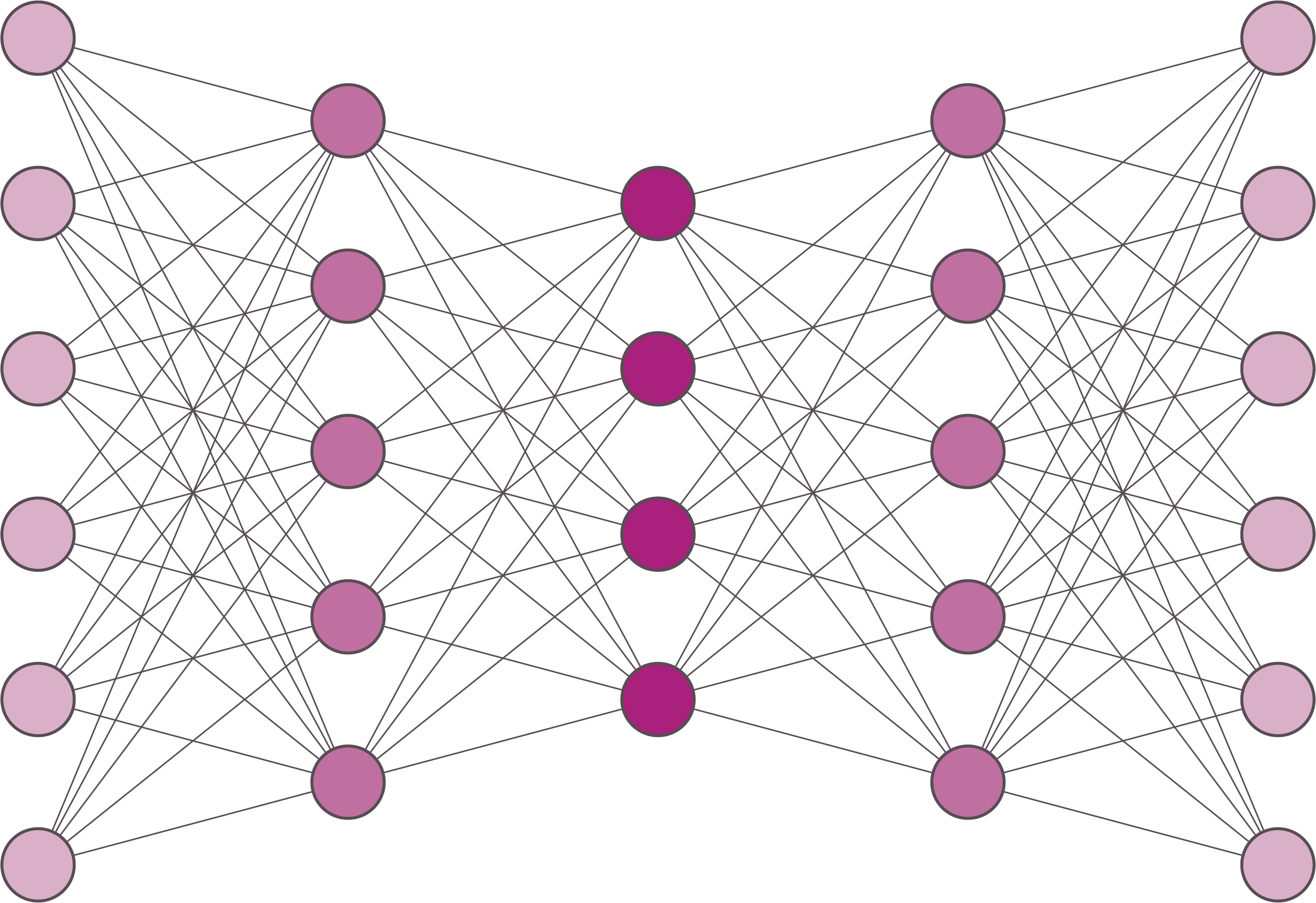

Language models like GPT-4, trained on vast amounts of text data, are designed to predict the next token (roughly, a word or word fragment) in a sequence from the context provided by the preceding tokens. Their underlying neural network architecture does not inherently "understand" information; it recognizes statistical patterns in the data on which it was trained.
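To make next-word prediction concrete, here is a deliberately tiny sketch. It is not how GPT-4 works internally (that model is a large neural network); it is a bigram counter, used here only as an assumption-laden stand-in to show what "predicting from patterns" means. The names `train_bigram` and `predict_next` and the three-sentence corpus are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for each word, which words follow it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Illustrative toy corpus (an assumption, not real training data).
corpus = [
    "paris is the capital of france",
    "rome is the capital of italy",
    "paris is a city in france",
]
model = train_bigram(corpus)

print(predict_next(model, "capital"))  # the word that most often followed "capital"
print(predict_next(model, "berlin"))   # never seen in the corpus
```

The predictor has no notion of whether a continuation is true; it only returns whatever followed most often in its training text. That is the property the rest of this article is concerned with.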

Hallucinations in Large Language Models:

Hallucinations are instances where a language model generates content that sounds fluent and plausible but is factually incorrect or unsupported by the prompt and its context. These can occur for several reasons:

- Ambiguity: Given ambiguous prompts, the model may infer a context that the user didn’t intend, leading to outputs that seem out of place.

- No Definitive Answer: For queries that don't have a single correct response, the model might provide an answer that appears plausible but is fabricated based on the patterns it has seen.

- Overfitting: If the model overfits to certain patterns or quirks in the training data, it can reproduce memorized or idiosyncratic details that do not hold in general.

- Lack of Ground Truth: Unlike a structured database, where every piece of information has a definitive source or reference, neural models generate responses based on patterns, not stored facts. Thus, there's no guarantee that a statement made by a model is verifiable.

The Hybrid Approach: Incorporating Knowledge Graphs

Knowledge graphs provide a structured representation of factual information. Nodes in the graph represent entities (like people, places, or things) and the edges between them signify relationships.
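A common way to store such a graph is as a collection of (subject, relation, object) triples. The sketch below is a minimal in-memory version, assuming nothing beyond the Python standard library; the class name `KnowledgeGraph`, its methods, and the relation names (`born_in`, `field`, `capital_of`) are illustrative choices, not the API of any particular graph database.

```python
from collections import defaultdict

class KnowledgeGraph:
    """Minimal triple store: entities are nodes, relations are labeled edges."""

    def __init__(self):
        # Maps (subject, relation) to the set of objects linked by that edge.
        self._edges = defaultdict(set)

    def add(self, subject, relation, obj):
        """Record the fact (subject, relation, obj)."""
        self._edges[(subject, relation)].add(obj)

    def objects(self, subject, relation):
        """Return all objects linked to `subject` by `relation` (empty set if none)."""
        return set(self._edges.get((subject, relation), set()))

kg = KnowledgeGraph()
kg.add("Marie Curie", "born_in", "Warsaw")
kg.add("Marie Curie", "field", "Physics")
kg.add("Marie Curie", "field", "Chemistry")
kg.add("Warsaw", "capital_of", "Poland")

print(kg.objects("Marie Curie", "born_in"))
print(kg.objects("Marie Curie", "field"))
```

Production systems typically use a dedicated graph store and a query language instead, but the underlying shape of the data is the same: entities connected by named relationships.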

Integrating a large language model with a knowledge graph offers several potential benefits:

- Fact-Checking: Before a response is returned, its factual claims can be cross-referenced against the knowledge graph, so that unsupported or contradicted statements are flagged or corrected.

- Contextual Awareness: Knowledge graphs provide structured context, allowing the model to better understand the relationships between entities and provide more coherent answers.

- Reduced Ambiguity: Knowledge graphs can offer clearer semantics, making it less likely for the model to make incorrect assumptions about a query.

- Up-to-date Information: Knowledge graphs can be updated with new information without retraining the model, so responses can draw on current data as long as the graph itself is maintained.
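The fact-checking idea in the list above can be sketched as a lookup against stored triples. This is a simplified illustration under two stated assumptions: the model's claim has already been extracted into a (subject, relation, object) triple, which is the hard part in practice and is not shown here, and each (subject, relation) pair has a single correct object. The function name `check_claim` and the `FACTS` set are hypothetical.

```python
# Illustrative knowledge graph, stored as a set of (subject, relation, object) triples.
FACTS = {
    ("Marie Curie", "born_in", "Warsaw"),
    ("Warsaw", "capital_of", "Poland"),
}

def check_claim(facts, subject, relation, obj):
    """Classify a claim as 'supported', 'contradicted', or 'unknown'."""
    if (subject, relation, obj) in facts:
        return "supported"
    # Assumption: one correct object per (subject, relation) pair, so any
    # different stored object means the claim conflicts with the graph.
    known = {o for s, r, o in facts if s == subject and r == relation}
    if known:
        return "contradicted"
    # The graph has nothing to say about this subject and relation.
    return "unknown"

print(check_claim(FACTS, "Marie Curie", "born_in", "Warsaw"))
print(check_claim(FACTS, "Marie Curie", "born_in", "Paris"))
print(check_claim(FACTS, "Marie Curie", "died_in", "Passy"))
```

Separating "contradicted" from "unknown" matters: a graph can only vouch for what it contains, so a claim it has no record of is unverified rather than false.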

In essence, while large language models can generate fluent, human-like text based on patterns in data, they can "hallucinate" and produce unverified or incorrect information. Incorporating knowledge graphs offers a more grounded approach, where generated information can be checked against structured, curated data. This hybrid approach points toward language models that are both creative and accurate, combining the strengths of each.